It’s been a while…

October 25, 2012

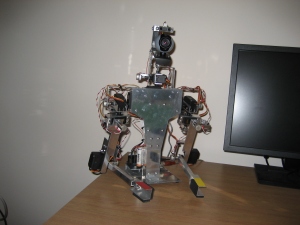

It’s been too long since my last post, sorry about that! I’ve had a lot of non-robot-related stuff going on, but I have managed to make some progress. I’ve modified my robot’s head to include a Sharp GP2D12 IR sensor to give the robot some basic depth perception. This required fabricating a new head bracket and some trial and error to get the sensor positioned correctly.

The first attempt used a mini servo to tilt the IR sensor up and down. I figured that if an object is close to the camera, the IR sensor would need to be angled further down to look straight at it; if the object is further away, the sensor would need to be angled slightly higher. I built this set-up, but some experimentation showed that the horizontal beam of the IR sensor is wide enough to pick up an object near the centre of the camera’s field of view anywhere within the sensor’s usable range. As a result, the first design went in the bin and I fabricated a new head bracket with the IR sensor mounted above the camera, with some adjustment to alter its angle. Have a look at the picture below to see the final design.
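To put rough numbers on the tilting idea: with the IR sensor a height h above the camera and an object straight ahead at distance d, the sensor needs to tilt down by atan(h/d). The sketch below assumes a hypothetical 4 cm offset between sensor and camera and uses the GP2D12’s rated 10 to 80 cm range; it is an illustration, not something I ran on the robot.

```python
import math


def tilt_down_deg(offset_cm: float, distance_cm: float) -> float:
    """Downward tilt (degrees) for a sensor mounted offset_cm above the camera
    axis to point at an object distance_cm away along that axis."""
    return math.degrees(math.atan2(offset_cm, distance_cm))


# Hypothetical 4 cm sensor-to-camera offset; distances span the GP2D12's 10-80 cm range.
for d in (10, 20, 40, 80):
    print(f"{d:3d} cm -> {tilt_down_deg(4.0, d):5.1f} deg")
```

Most of the change happens close in: the required tilt drops from about 22 degrees at 10 cm to about 6 degrees at 40 cm and under 3 degrees at 80 cm, which is one way to see why a reasonably wide beam can make the servo unnecessary.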

The IR sensor gives an analogue output voltage that varies with the distance of the object from the sensor. I modified the Arduino code to read the analogue input pin the sensor is connected to, scale the value, and send it over serial along with all the other data from the potentiometers.
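Here’s a minimal sketch of that scaling and of reading the combined data on the PC side. A few assumptions to flag loudly: the line format (ten comma-separated potentiometer readings followed by the raw IR reading) is hypothetical, the fit constants `K`, `B` and `C` are placeholders (an often-quoted inverse fit for the GP2D12 on a 10-bit, 5 V ADC, which should be calibrated against your own sensor), and in my set-up the scaling actually happens on the Arduino rather than in Python.

```python
from typing import List, Optional, Tuple

NUM_SERVOS = 10  # ten servo potentiometers, matching the ten GUI sliders

# Placeholder constants for the inverse fit  cm = K / (raw - B) - C.
# The GP2D12 output is non-linear, roughly inversely proportional to distance.
K, B, C = 6787.0, 3.0, 4.0
MIN_CM, MAX_CM = 10.0, 80.0  # GP2D12's rated measuring range


def raw_to_cm(raw: int) -> Optional[float]:
    """Approximate distance in cm for a raw 0-1023 reading, or None if out of range."""
    if raw <= B:
        return None
    cm = K / (raw - B) - C
    return cm if MIN_CM <= cm <= MAX_CM else None


def parse_line(line: str) -> Tuple[List[int], Optional[float]]:
    """Split one hypothetical 'p0,...,p9,ir' line into pot readings and IR distance."""
    fields = [int(f) for f in line.strip().split(",")]
    if len(fields) != NUM_SERVOS + 1:
        raise ValueError(f"expected {NUM_SERVOS + 1} fields, got {len(fields)}")
    return fields[:NUM_SERVOS], raw_to_cm(fields[-1])


pots, distance = parse_line("512,500,480,490,510,520,530,540,550,560,300\n")
print(pots, distance)
```

With pySerial, each line from `ser.readline().decode("ascii")` could be passed straight to `parse_line`; the port name and baud rate depend on your own set-up.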

On the software side of things, a lot has changed. I started playing around with the Raspberry Pi, initially using qtonpi. This seemed to have a lot of potential but appears to be in the early stages of development. Being a bit lazy, I didn’t feel it was worth the effort required, although I may revisit it in the future. I decided to use the recommended Raspbian distribution instead, which works pretty much out of the box. I also decided to delve into the world of Python programming because of the strong links between it and the Raspberry Pi.

On the back of this, and in an attempt to get to grips with Linux, I’ve replaced Windows on my desktop PC with Ubuntu. I’ve re-written a lot of my code in Python and built OpenCV with the Python bindings. I have to admit, I think Ubuntu is great and I’m getting on really well with Python. I created a GUI using Tkinter and used Python’s serial libraries to let the servos of my robot be controlled from the PC. The program only has basic functionality at the moment, but it only took a few days to write. The longest part of the process was building OpenCV with all the required dependencies and getting to grips with using it in a Python program.

Check out the screenshot below to see the program running. I’ve implemented colour tracking in OpenCV by converting the webcam image to HSV and applying a threshold to give a binary (black and white) image. The centre of the coloured object is then found using image moments. The 10 blue sliders on the GUI set the desired position of each servo, and the edit box below each one shows the actual position read back from its potentiometer. The other 6 green sliders adjust the OpenCV threshold values so that different colours can be picked out.
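The HSV threshold plus moments pipeline boils down to very little arithmetic. The sketch below is a pure-Python stand-in for `cv2.cvtColor`, `cv2.inRange` and `cv2.moments`, using the standard library’s `colorsys` on a tiny hand-made image so each step is visible. It isn’t the code from my program, and the "blue" bounds are hypothetical. Note that `colorsys` works with values from 0 to 1, whereas OpenCV’s 8-bit HSV uses H from 0 to 179 and S, V from 0 to 255.

```python
import colorsys


def hsv_mask(image, lower, upper):
    """Return a binary mask (1 = inside the HSV bounds) for an RGB image.

    image: list of rows of (r, g, b) tuples with 0-255 components.
    lower/upper: (h, s, v) bounds on colorsys' 0-1 scale.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = all(lo <= x <= hi for x, lo, hi in zip((h, s, v), lower, upper))
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


def centroid(mask):
    """Centre of the mask from its raw moments: (m10 / m00, m01 / m00)."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            m00 += value
            m10 += x * value
            m01 += y * value
    if m00 == 0:
        return None  # nothing matched the threshold
    return m10 / m00, m01 / m00


# 4x4 test image: grey background with a 2x2 pure-blue patch at the bottom right.
GREY, BLUE = (128, 128, 128), (0, 0, 255)
image = [[GREY] * 4 for _ in range(4)]
for y in (2, 3):
    for x in (2, 3):
        image[y][x] = BLUE

# Hypothetical "blue" bounds: hue 0.55-0.75, reasonably saturated and bright.
mask = hsv_mask(image, lower=(0.55, 0.5, 0.3), upper=(0.75, 1.0, 1.0))
print(centroid(mask))  # (2.5, 2.5)
```

In the real program these loops are replaced by `cv2.inRange` on the HSV frame and `cv2.moments` on the resulting mask, whose returned dictionary holds the same `m00`, `m10` and `m01` values.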

I did run into one problem using OpenCV moments with Python: a major memory leak when calculating the moments of the image. I think this was caused by converting an iplimage to a Mat, which was required before the image could be passed to `cv.moments`. I ended up converting the image to a NumPy array and passing it to the newer `cv2.moments` function instead, which worked just as well and got rid of the memory leak. It took me two days to get to the bottom of that one!

I also built OpenCV on the Raspberry Pi, which takes about 10 hours! I’ve tried running my Python software on the Pi. The GUI on its own runs well, allowing control of the servos and displaying their actual positions. However, when image display and processing are added into the mix, the Pi struggles. The problem seems to be a delay in getting the image data from the camera rather than the Pi struggling with the processing. I couldn’t get more than about 2 frames per second, which made further progress on the Pi hard work. As a result, the Pi is back in its box for the moment and I’m continuing development using Ubuntu on my desktop.
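One way to check whether capture or processing is the bottleneck is to time the two stages separately. Here’s a minimal sketch using `time.perf_counter`; `grab_frame` and `process_frame` are hypothetical stand-ins (they just sleep for made-up durations) for the camera read and the OpenCV work.

```python
import time


def grab_frame():
    """Hypothetical stand-in for reading a frame from the webcam."""
    time.sleep(0.02)
    return object()


def process_frame(frame):
    """Hypothetical stand-in for the HSV threshold and moments step."""
    time.sleep(0.005)


def profile(n_frames=20):
    """Return average capture time (s), average processing time (s) and overall fps."""
    capture = processing = 0.0
    start = time.perf_counter()
    for _ in range(n_frames):
        t0 = time.perf_counter()
        frame = grab_frame()
        t1 = time.perf_counter()
        process_frame(frame)
        t2 = time.perf_counter()
        capture += t1 - t0
        processing += t2 - t1
    total = time.perf_counter() - start
    return capture / n_frames, processing / n_frames, n_frames / total


cap, proc, fps = profile()
print(f"capture {cap * 1000:.1f} ms, processing {proc * 1000:.1f} ms, {fps:.1f} fps")
```

On the Pi, swapping a `cv2.VideoCapture(0).read()` call into `grab_frame` (and the real processing into `process_frame`) would show how the per-frame time splits between the camera and the maths.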

As you can see, I’ve made a few changes to the tools I’m using to develop the software for my robot. It was a surprisingly difficult decision to make. I like Qt and the C++ language and was really starting to get to grips with them. You can make some great-looking GUIs, and I’ve had a play with QML, which opens up even more possibilities for custom interfaces. However, because I’m not a professional programmer and tend to dip in and out of programming as and when I get time, it would take me a long time to implement new functionality in my programs. Python, on the other hand, allows new functionality to be added quickly, meaning a new idea or hardware addition can be included without too much fuss. I’ve seen comments that Python runs slower than a compiled language, but I can’t say I’ve noticed any real slowdown when the program is running, although I appreciate that my program is fairly basic at the moment. I think I’m going to stick with Python while the robot is very much in development.

My next step is to add a visual representation of the reading from the IR sensor to the GUI and do a bit more testing. I also need to add the head tracking functionality to my Python code along with the ability to program moves and replay them. Hopefully it won’t be quite as long between posts for anyone following my progress. I’ll leave you with a picture of the whole robot with his new head. See you soon!